Tim's Window is Closed - 1000 Reasons Why

Introduction

Modelling of anything has come a long way from simple if-else rules to multi-agent workflows and even beyond with AI nowadays. In the occupant behaviour domain, simple schedules are considered one of the simplest and least detailed ways of modelling. For example, if it is after 5PM, the office is supposed to be closed — so there cannot be anybody — so the lights are off.

Either way, deterministic or stochastic, AI and ML have enabled us to add more detail to our models and seek better resolution in modelling outcomes. Especially, LLMs provide very good reasoning these days, making them great co-workers for researchers.

After working with these LLMs for some time, I realised that almost all the reasons they give in my work make sense. They are supposed to make sense anyway, if you are not strongly deterministic.

My Experiment

I did this quick experiment today to see how an LLM would reason a simple incident related to occupant behaviour, which is my domain.

I gave it some background about a hypothetical person — Tim. This included a description of his room, its physical boundaries and building sub-systems, as well as his daily routine and general lifestyle. The scenario was set at 18:00 on a cold February evening in Glasgow, with an outdoor temperature of around 3°C, dark skies, and a chance of snow (climate was identified by the LLM itself). I also provided a blackbox theoretical framework to guide the reasoning.

The model I used was Claude Sonnet 4.6 by Anthropic.

I asked it to give me a list of 1,000 reasons as to why Tim's window is closed, as observed from the outside.

Results

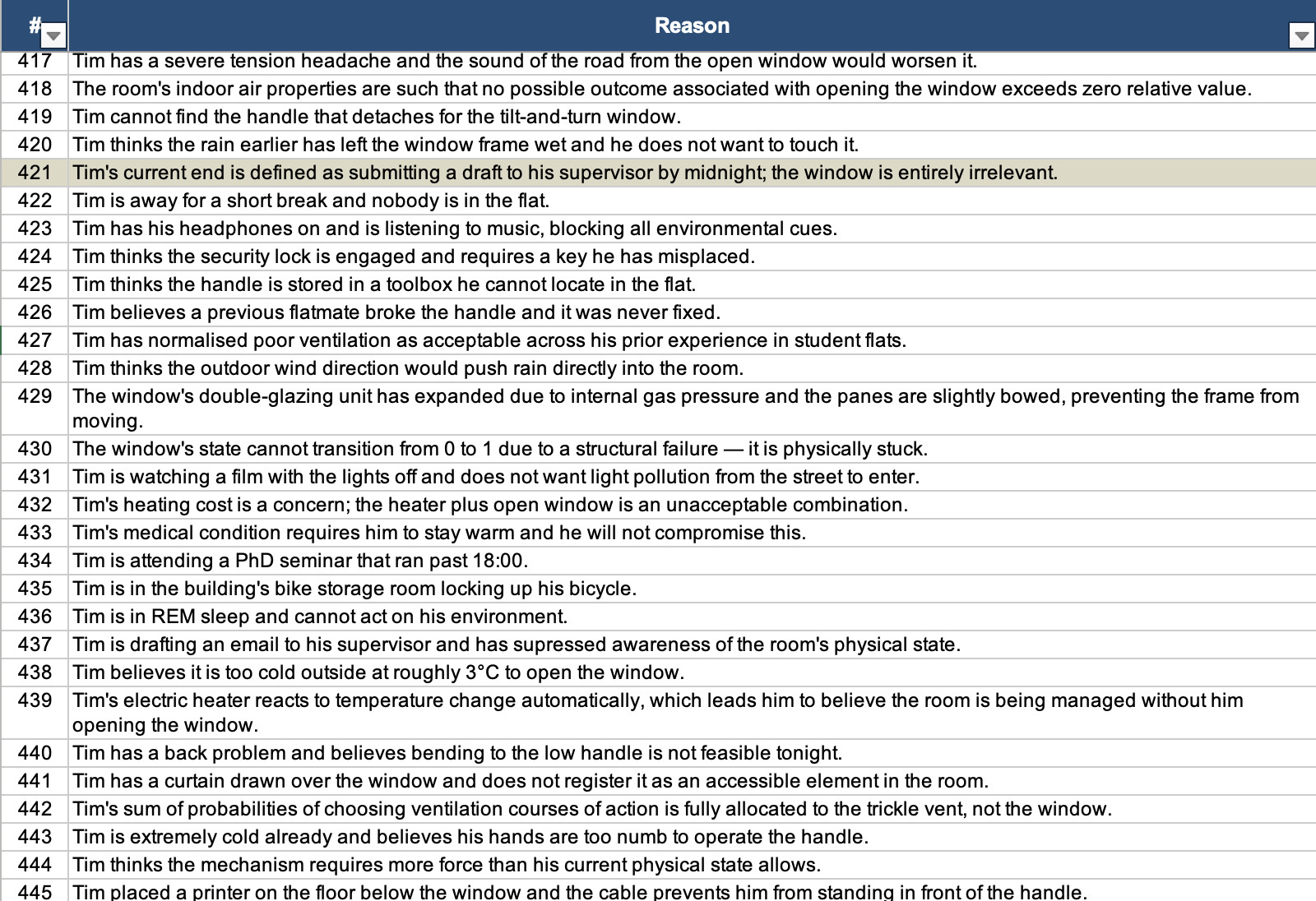

(Part of the list of 1000 reasons)

(Part of the list of 1000 reasons)

Well. It took about 15 minutes to prepare the output and provide it as an Excel file. I am sharing it as it is.

I read many of them at random, and almost all of them make sense. You will see some reasons for him simply not being present, which we could easily simplify as occupant absence. Still, the list has 1,000 reasons why, and you cannot argue that the majority is wrong — at least as someone observing from outside with very little information about Tim.

I would stand with the LLM on that.

Conclusion

There is not much more to say. You and I both know that none of us would spend time brainstorming 1,000 reasons why Tim's window is closed. And if we did, how much time and energy would we spend — or waste?

The takeaway is that with LLMs, you can run such experiments effortlessly, with very little awareness of resource consumption along the way. The results will amaze you.

But do you see any problem being solved by the 1,000 reasons we just got? Nothing.

We need purposeful and responsible use of AI. Don't we?

Resource Use

Here is what the LLM had to say about the resources consumed in this task:

Token Usage

I don't have direct access to a token counter for our conversation, but I can give you a solid estimate.

Rough token breakdown:

- Your messages + uploaded documents: ~15,000 tokens

- My responses (text): ~8,000 tokens

- The 1,000 reasons generated in the Python script (not in context, written directly to file): those were composed within a single code block, so they count as output tokens — roughly ~40,000 tokens

- System prompt + tool outputs: ~10,000 tokens

Estimated total: ~73,000 tokens (input + output combined), with the bulk being the reasons generation step.

Energy & Carbon Estimate

This is genuinely rough — the numbers vary significantly by data centre, hardware, and load.

Token energy benchmarks (from literature on large transformer models):

- A rough estimate for a model of Claude's scale is ~0.001–0.003 kWh per 1,000 tokens

- At 73,000 tokens: roughly 0.07–0.22 kWh

Carbon intensity:

- Anthropic's data centres use AWS infrastructure, which has a mixed energy profile. A reasonable estimate for US-based cloud is ~200–400 gCO₂/kWh, though Anthropic has commitments toward renewable energy which would lower this.

- At 0.07–0.22 kWh × 300 gCO₂/kWh: roughly 21–66 gCO₂

To put that in context:

- Sending roughly 10–30 emails is in a similar range

- A Google search is ~0.3 g CO₂, so this task is equivalent to roughly 70–220 Google searches

- Boiling a kettle once is ~15 g CO₂ — so this task is comparable to boiling the kettle 1–4 times